CTEM vs BAS (Breach and Attack Simulation)

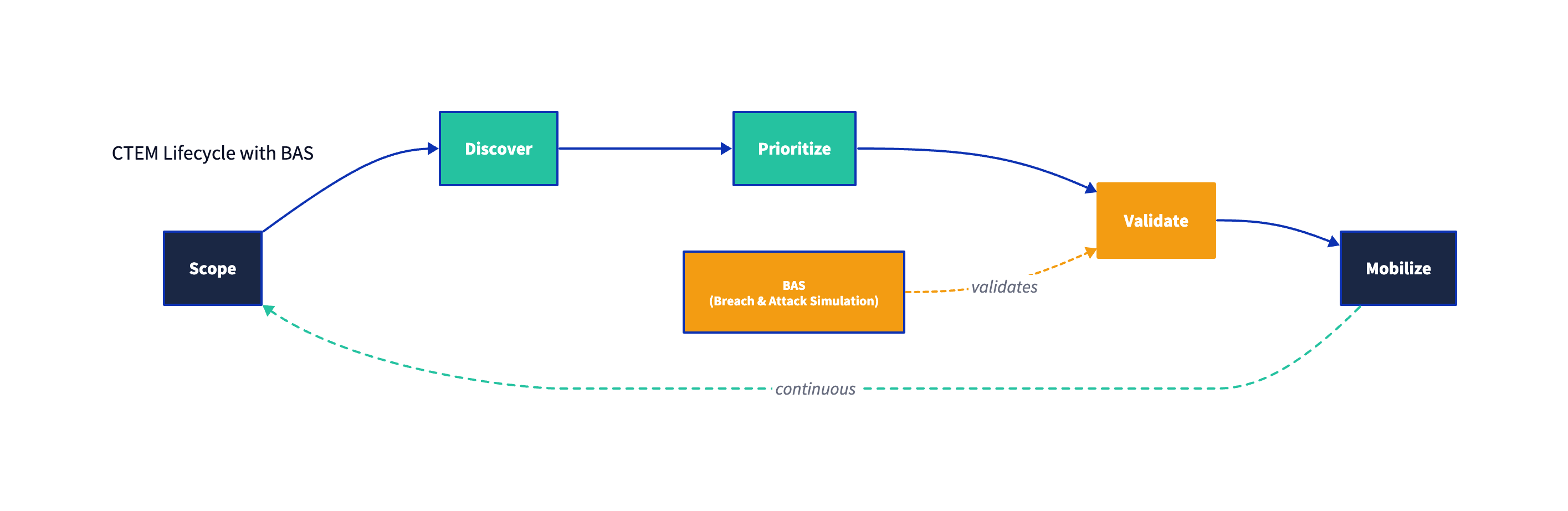

BAS is a validation technique: a way to prove whether controls work. CTEM is a five-stage framework for continuously reducing material exposure. BAS most directly supports the Validate stage of CTEM, while CTEM spans the full exposure management lifecycle.

Overview

Security teams tend to compare CTEM and BAS because both are described as “continuous” and both produce security findings. The comparison misleads because they operate at different layers:

- CTEM (Continuous Threat Exposure Management) is a program: a repeatable operating model for deciding what matters, proving what is exploitable, and driving remediation across teams—not just generating findings.

- BAS (Breach and Attack Simulation) is a technical validation method: controlled simulations that answer, with evidence, whether prevention and detection controls perform as intended against specific adversary behaviors.

In practice, most mature programs use both:

- CTEM provides the decision system (scope → discovery → prioritization → validation → mobilization).

- BAS provides a repeatable source of empirical evidence inside that system, especially in validation and regression testing.

If you want a simple mental model: CTEM decides what to validate and fix; BAS helps prove what actually works (or fails).

Comparison at a glance

| Dimension | CTEM | BAS |
|---|---|---|
| What it is | A five-stage exposure management program | A simulation/validation technique |
| Primary question | “What exposure is most material to the business right now, and what will we do about it?” | “Does this control prevent/detect/respond to this attack technique in our environment?” |
| Output | A risk-driven backlog, remediation plans, ownership, deadlines, and progress metrics | Evidence of control effectiveness, gaps, telemetry, and retest results |
| Success condition | Remediation happens (or risk is explicitly accepted) and exposure trends down | Controls are continuously verified; drift is detected quickly |
| Common failure mode | “Great dashboards, no decisions.” | “Great simulations, no remediation path.” |

CTEM is not “BAS at scale.” BAS is one valid way to generate evidence during validation, but CTEM also relies on governance, discovery, prioritization logic, and cross-functional execution that BAS tools do not (and should not be expected to) provide.

What is BAS?

Breach and Attack Simulation (BAS) is a security testing approach where an organization runs controlled simulations of attacker behaviors to evaluate whether controls and detections work as expected. In other words: BAS “pretends to be the attacker,” then measures what happens in your environment.

BAS commonly aims to produce repeatable, low-risk simulations that can be run:

- on a schedule (e.g., daily/weekly), and/or

- triggered by change (e.g., new EDR policy, firewall rule update, identity configuration drift, new cloud deployment).

What BAS typically validates

A well-run BAS practice focuses on observable outcomes rather than theoretical assurance:

- Prevention evidence: Was a technique blocked?

- Detection evidence: Was an alert generated with the expected fidelity?

- Response evidence: Did the right playbook/action occur, and did it happen fast enough?

- Control drift: Did something that used to work stop working after a change?
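The control-drift check in particular lends itself to a small sketch. The example below assumes each simulation run is reduced to a scenario ID and a single outcome; the names `Outcome`, `RunResult`, and `find_drift` are hypothetical and do not come from any specific BAS product.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    PREVENTED = "prevented"
    DETECTED = "detected"
    MISSED = "missed"


@dataclass(frozen=True)
class RunResult:
    scenario_id: str  # hypothetical identifier for a simulation scenario
    outcome: Outcome


def find_drift(previous: list[RunResult], current: list[RunResult]) -> list[str]:
    """Return scenario IDs that were prevented or detected before but are missed now."""
    before = {r.scenario_id: r.outcome for r in previous}
    drifted = []
    for r in current:
        # Scenarios with no earlier result are new coverage, not drift.
        was_ok = before.get(r.scenario_id) in (Outcome.PREVENTED, Outcome.DETECTED)
        if was_ok and r.outcome is Outcome.MISSED:
            drifted.append(r.scenario_id)
    return drifted
```

This sketch only flags a full regression to "missed". A stricter variant could also flag a step down from prevented to detected.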

BAS scenarios often map to known adversary techniques (commonly aligned to frameworks like MITRE ATT&CK) to support threat-informed coverage and reporting.

BAS vs. penetration testing vs. red/purple teaming

These activities are complementary, not substitutes:

- Penetration testing is typically point-in-time, human-driven exploration of exploitability and business impact.

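- Red teaming evaluates detection and response under realistic, multi-stage adversary behavior, often with strong emphasis on operational readiness, not just control mechanics. Purple teaming runs comparable exercises collaboratively, with attackers and defenders sharing findings as they go.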

- BAS is best at repeatability, breadth, and continuous regression testing. It is less effective at creative chaining, novel exploit development, and social/organizational complexity.

A practical way to position BAS internally: treat it like regression testing for security controls and detections. It’s not the only assurance mechanism—but it’s the one most likely to catch post-change breakage before an adversary does.

Where BAS Fits in CTEM

In Gartner’s five-stage CTEM cycle—Scope → Discover → Prioritize → Validate → Mobilize—BAS aligns most directly with Validate, and secondarily supports Prioritize and Mobilize by providing hard evidence.

How BAS contributes across CTEM stages

| CTEM stage | What CTEM is trying to accomplish | How BAS can help (and where it doesn’t) |
|---|---|---|
| Scope | Decide what assets/processes matter and define boundaries | BAS can inform scoping only indirectly (e.g., showing which control failures are common in a segment). Scope still requires business context. |
| Discover | Identify relevant assets, exposures, misconfigurations, and control posture | BAS can reveal “unknown unknowns” (e.g., missing telemetry, broken detections), but it is not a substitute for asset/exposure discovery. |
| Prioritize | Decide what to fix first based on likelihood + impact + feasibility | BAS can provide evidence of exploitability/control failure that elevates priority, but prioritization still needs business criticality and attack-path context. |
| Validate | Prove what is exploitable and how defenses react | BAS is a primary technique here, alongside penetration testing, threat modeling, and adversary emulation. |
| Mobilize | Make remediation happen across teams | BAS can supply proof and retest results, but CTEM provides the operating model: owners, workflows, SLAs, and decision logging. |

Validation Stage Deep Dive

Validation is where CTEM becomes materially different from “finding management.”

Discovery and prioritization can still be largely inferential—based on scanning, telemetry, and probability models. Validation demands proof: it asks whether a theoretical exposure is actionable for an adversary and whether your defenses meaningfully change the outcome.

What “validation” means in CTEM terms

A rigorous CTEM validation step typically answers three questions:

- Exploitability: Can an attacker realistically exploit this exposure in our environment (given prerequisites and compensating controls)?

- Path feasibility: If exploited, what are the plausible attack paths to high-value assets?

- Defensive outcome: Do our controls prevent, detect, and enable response fast enough to keep business risk within tolerance?

BAS is strong on defensive outcome, useful on exploitability for many classes of exposure, and only partially addresses path feasibility unless it is combined with attack-path analysis or adversary emulation planning.

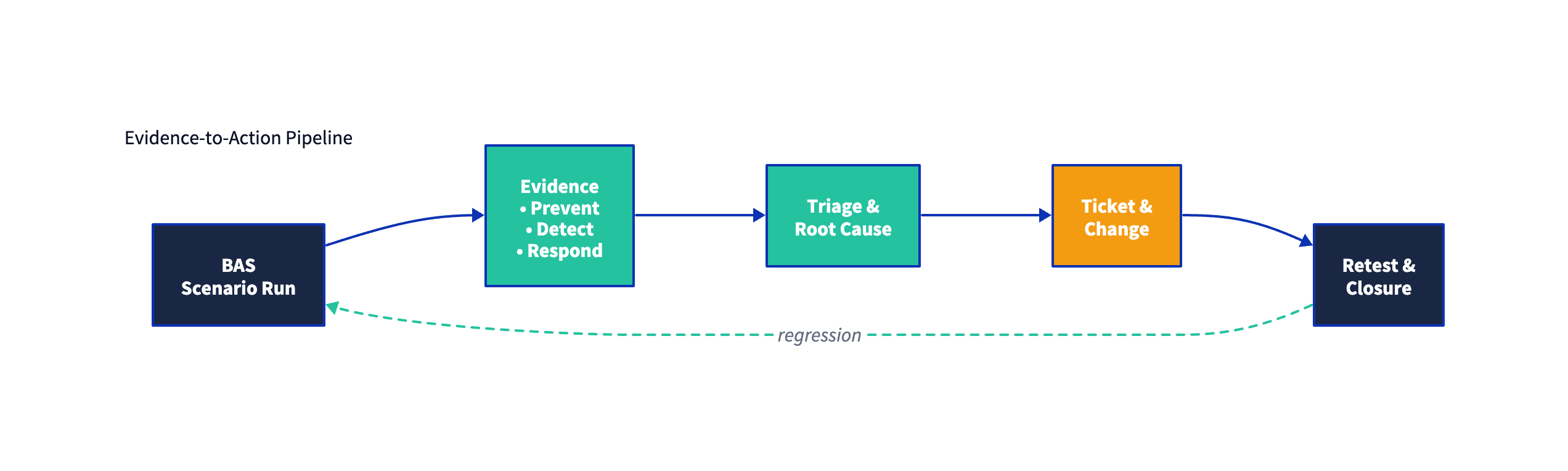

A practical BAS-driven validation workflow (CTEM-aligned)

Below is a pattern that scales without collapsing into “run tests, generate noise”:

1. Select scenarios from prioritized exposures

   - Prefer scenarios tied to crown-jewel services, high-impact data, and identity paths.

   - Anchor scenarios to a threat model: “Which behaviors would matter if we assume compromise?”

2. Define expected outcomes before you run

   - What does “good” look like?

     - Prevented at control X

     - Detected by control Y with alert Z

     - Response action triggered within N minutes

3. Execute safely

   - Run with tight rules of engagement and guardrails (rate limits, maintenance windows, test accounts).

   - Ensure the SOC knows what is being tested to avoid false incident escalation.

4. Capture evidence in a form remediation teams can act on

   - Evidence should include:

     - the technique/behavior tested,

     - what did or did not happen (block/detect/respond),

     - where it failed (policy, telemetry gap, tuning issue, coverage gap),

     - and a concrete next step.

5. Drive remediation through CTEM mobilization

   - The finding is not “the BAS result.” The finding is a prioritized exposure with verified impact and an assigned remediation plan.

6. Retest (regression)

   - The retest result is what closes the loop and provides a durable metric for progress.

A common mistake is treating BAS output as a “score” to improve. In CTEM terms, the goal is risk reduction, not cosmetic score optimization. Evidence should be traceable to material assets, pathways, and business impact.
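The "define expected outcomes before you run" step of the workflow can be made concrete with a small comparison of expected versus observed behavior. The following is a minimal sketch under stated assumptions: `Expectation`, `Observation`, and `evaluate` are hypothetical names, and a real BAS platform would expose richer result data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Expectation:
    prevented: bool                        # should the technique be blocked?
    detected: bool                         # should an alert fire?
    max_response_minutes: Optional[float]  # None means no response action is expected


@dataclass(frozen=True)
class Observation:
    prevented: bool
    detected: bool
    response_minutes: Optional[float]      # None means no response action was seen


def evaluate(expected: Expectation, observed: Observation) -> list[str]:
    """Return a list of gaps; an empty list means the run met expectations."""
    gaps = []
    if expected.prevented and not observed.prevented:
        gaps.append("not prevented")
    if expected.detected and not observed.detected:
        gaps.append("not detected")
    if expected.max_response_minutes is not None:
        if observed.response_minutes is None:
            gaps.append("no response action")
        elif observed.response_minutes > expected.max_response_minutes:
            gaps.append("response too slow")
    return gaps
```

Returning named gaps rather than a single pass/fail flag keeps the result close to the evidence remediation teams need: what failed and where to look next.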

Beyond Validation

If BAS is the empirical engine, CTEM is the entire vehicle: steering, navigation, and brakes.

CTEM explicitly includes activities that BAS does not cover well (or at all), including:

1) Scoping with business context

CTEM starts by deciding what matters. That is a governance decision, not a tool output.

A CTEM scope often includes:

- business-critical applications and data flows,

- identity and privileged access paths,

- externally exposed services and SaaS,

- critical third-party dependencies,

- and any “blast radius” systems that would turn a technical compromise into business interruption.

BAS can test within that scope—but it generally cannot define it.

2) Discovery and asset reality

Most environments still struggle with asset truth:

- unmanaged endpoints,

- shadow SaaS,

- stale identities,

- forgotten internet-facing services,

- misaligned ownership.

CTEM assumes discovery is continuous and imperfect, then builds a programmatic loop to keep it improving. BAS can highlight gaps (e.g., “no telemetry from segment X”), but discovery is broader than simulation.

3) Prioritization that survives scale

BAS can identify many weaknesses. CTEM forces a more important question: what are you willing to ignore—explicitly—so you can fix what matters?

Prioritization in CTEM is typically driven by:

- business criticality (impact),

- feasibility of exploitation (likelihood),

- compensating controls (risk reduction already present),

- and operational constraints (what can be changed safely).
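As an illustration only, the four factors above could be combined into a simple ranking. The 1-to-5 scales, the 0.5 discount for compensating controls, and the names `Exposure`, `priority`, and `rank` are all assumptions made for this sketch, not a calibrated or standard scoring model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exposure:
    name: str
    impact: int           # business criticality, 1 (low) to 5 (high)
    likelihood: int       # feasibility of exploitation, 1 to 5
    compensated: bool     # True if a compensating control already reduces risk
    safe_to_change: bool  # False if operational constraints block a fix right now


def priority(e: Exposure) -> float:
    score = float(e.impact * e.likelihood)
    if e.compensated:
        score *= 0.5  # illustrative discount, not a calibrated value
    return score


def rank(exposures: list[Exposure]) -> list[Exposure]:
    # Actionable items first, then by descending score.
    return sorted(exposures, key=lambda e: (not e.safe_to_change, -priority(e)))
```

Items that cannot be changed safely sort last here, which suits a work queue. In a real program, a high-scoring blocked item would more likely be routed to an explicit risk-acceptance decision than left at the bottom of a list.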

4) Mobilization and execution

CTEM’s “mobilize” stage is where many programs either mature or stall.

A serious mobilization model includes:

- clear ownership for each remediation type (app team, IAM, network, cloud platform, endpoint engineering),

- time-bound commitments (SLAs/SLOs that leadership can enforce),

- a defined “risk acceptance” process when remediation is impractical,

- and closed-loop measurement (validated before/after).

BAS can provide retest evidence, but it does not resolve cross-team execution friction—CTEM is designed to.
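The mobilization elements listed above (ownership, time-bound commitments, risk acceptance, closed-loop measurement) map naturally onto a tracked work item. This is a hedged sketch: `RemediationItem` and its fields are hypothetical, and most teams would hold this data in an existing ticketing system rather than in code.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class RemediationItem:
    exposure: str
    owner: str                              # e.g. "IAM", "endpoint engineering"
    opened: date
    sla_days: int
    retest_passed: bool = False
    risk_accepted_by: Optional[str] = None  # who signed off, if anyone

    @property
    def due(self) -> date:
        return self.opened + timedelta(days=self.sla_days)

    def is_closed(self) -> bool:
        # Closed only by a passing retest or an explicit, attributed risk acceptance.
        return self.retest_passed or self.risk_accepted_by is not None

    def is_overdue(self, today: date) -> bool:
        return not self.is_closed() and today > self.due
```

The design choice worth noting is that "closed" has exactly two routes: a verified fix or a named person accepting the risk. Marking an item done without a retest is deliberately not possible.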

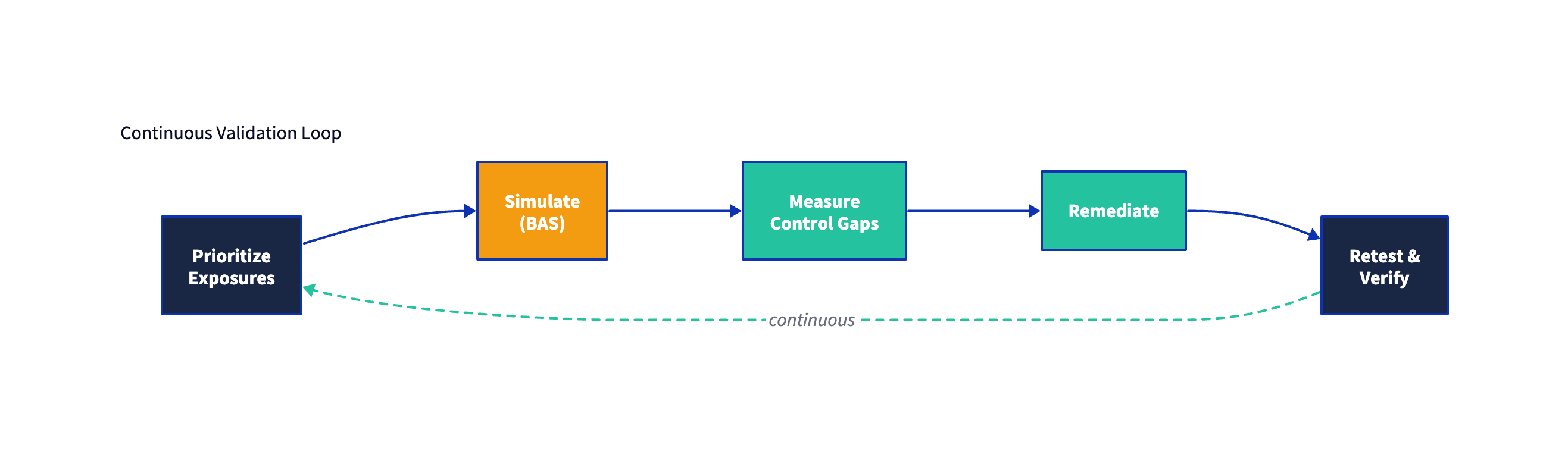

Continuous Validation in CTEM

“Continuous” is not a marketing adjective. It’s a requirement created by change: cloud deployments, identity policy updates, agent upgrades, and detection rule tuning all create drift.

A CTEM program treats validation as a continuous control effectiveness practice, not a yearly exercise.

How to make BAS continuous without making it noisy

A pragmatic model is to classify scenarios into three tiers:

- Tier 1 (always-on regression): small set of high-signal tests tied to crown jewels and critical controls. Run frequently.

- Tier 2 (change-triggered): run when specific changes occur (EDR policy updates, identity changes, major cloud releases).

- Tier 3 (campaign-based): deeper scenario packs tied to emerging threats, seasonal risk, or specific business initiatives.

Trigger events worth wiring into continuous validation

Consider initiating scenario runs when any of the following occur:

- new critical vulnerability affecting in-scope systems,

- configuration drift in identity, endpoint, network, or cloud posture,

- deployment of a new security control or major policy update,

- changes to logging/telemetry pipelines,

- new detections or changes to correlation rules,

- new threat intelligence indicating relevant TTPs.
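One way to wire triggers to the tier model is to tag each change-triggered scenario with the events that should start it. The sketch below assumes events arrive as plain strings; the event names, scenario names, and the `select_for_event` helper are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    ALWAYS_ON = 1
    CHANGE_TRIGGERED = 2
    CAMPAIGN = 3


@dataclass(frozen=True)
class Scenario:
    name: str
    tier: Tier
    triggers: frozenset[str] = frozenset()  # hypothetical event names


def select_for_event(scenarios: list[Scenario], event: str) -> list[str]:
    """Pick Tier 2 scenarios whose trigger set contains the event."""
    return [
        s.name
        for s in scenarios
        if s.tier is Tier.CHANGE_TRIGGERED and event in s.triggers
    ]


catalog = [
    Scenario("credential-dumping-regression", Tier.ALWAYS_ON),
    Scenario("edr-tamper-check", Tier.CHANGE_TRIGGERED, frozenset({"edr_policy_update"})),
    Scenario("mfa-bypass-check", Tier.CHANGE_TRIGGERED, frozenset({"identity_change"})),
]
```

Calling `select_for_event(catalog, "edr_policy_update")` returns only the EDR scenario, which is the point: a change starts the tests relevant to that change rather than the whole catalog.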

Metrics that matter (CISO-appropriate, practitioner-usable)

Avoid vanity metrics. Prefer metrics that connect evidence to risk reduction:

- Validated exposure burn-down: how many prioritized exposures moved from “assumed” to “proven fixed” (via retest).

- Control effectiveness drift rate: how often critical controls regress after change.

- Mean time to validate (MTTV): time from identifying a priority exposure to having empirical evidence.

- Mean time to remediate validated exposures (MTTR-V): time from validated failure to verified fix.

- Coverage of critical attack paths: percentage of crown-jewel pathways with recent validation evidence.
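Several of these metrics reduce to simple arithmetic over timestamps. The sketch below assumes each prioritized exposure carries three timestamps; `ExposureRecord` and the function names are hypothetical, and hours are an arbitrary choice of unit.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class ExposureRecord:
    prioritized_at: datetime
    validated_at: Optional[datetime] = None     # first empirical evidence
    fix_verified_at: Optional[datetime] = None  # passing retest


def _hours(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def mttv_hours(records: list[ExposureRecord]) -> Optional[float]:
    """Mean time to validate, over records that have been validated."""
    spans = [_hours(r.prioritized_at, r.validated_at) for r in records if r.validated_at]
    return mean(spans) if spans else None


def mttr_v_hours(records: list[ExposureRecord]) -> Optional[float]:
    """Mean time from validated failure to verified fix."""
    spans = [
        _hours(r.validated_at, r.fix_verified_at)
        for r in records
        if r.validated_at and r.fix_verified_at
    ]
    return mean(spans) if spans else None


def path_coverage(validated_paths: set[str], critical_paths: set[str]) -> float:
    """Fraction of critical attack paths with recent validation evidence."""
    if not critical_paths:
        return 0.0
    return len(validated_paths & critical_paths) / len(critical_paths)
```

Both averages skip records that have not reached the relevant milestone, so they should be reported alongside the count of still-open items to avoid flattering the numbers.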

Where this aligns with established security practice

The concept of continuously assessing whether controls “operate as intended” and “remain effective over time” is consistent with long-standing guidance on continuous monitoring and ongoing assessment. CTEM applies that philosophy specifically to threat exposure and attack-path reality, and BAS is one of the most practical mechanisms for generating empirical validation evidence.

Conclusion

CTEM and BAS are not competing ideas; they are complementary layers:

- CTEM is the operating model that ensures exposure work stays tied to business impact and actually results in remediation.

- BAS is one of the most scalable ways to produce continuous, repeatable evidence that security controls and detections behave the way you think they do.

If you already have BAS, CTEM gives you the missing structure: scoping discipline, prioritization logic, and mobilization rigor. If you have CTEM ambitions, BAS can be a powerful validation engine—so long as it is embedded in a loop that turns results into action, retests, and measured risk reduction.

Further reading

- Gartner on the five-stage CTEM cycle: https://www.gartner.com/en/articles/how-to-manage-cybersecurity-threats-not-episodes

- NIST Cybersecurity Framework (CSF) 2.0: https://nvlpubs.nist.gov/nistpubs/CSWP/NIST.CSWP.29.pdf

- NIST SP 800-137 (Information Security Continuous Monitoring): https://nvlpubs.nist.gov/nistpubs/legacy/sp/nistspecialpublication800-137.pdf

- MITRE Caldera documentation (automated adversary emulation / BAS-style exercises): https://caldera.readthedocs.io/

- MITRE Center for Threat-Informed Defense — Adversary Emulation Library: https://ctid.mitre.org/resources/adversary-emulation-library/

- BAS background and terminology (industry overview): https://www.esecurityplanet.com/threats/breach-and-attack-simulation-find-vulnerabilities-before-the-bad-guys-do/

- Cloud Security Alliance perspective on CTEM as a program (history/context): https://cloudsecurityalliance.org/blog/2024/05/24/the-transformative-power-of-continuous-threat-exposure-management-myth-or-reality